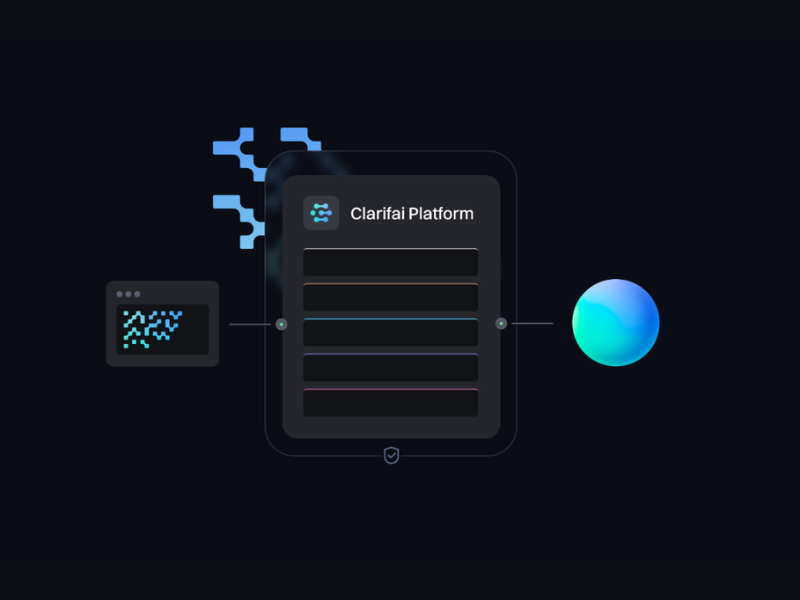

Clarifai, a global leader in AI and pioneer of the full-stack AI platform, announced on 30 January 2025 that several distilled versions of DeepSeek models are available on the Clarifai platform, where users can try them for free for a limited time.

The open source DeepSeek models on the platform are not the ones that have been put to the test against a highly touted OpenAI model, but they nonetheless mark the beginning of a new frontier in AI that users will no doubt rush to explore.

The DeepSeek models, from the Chinese startup of the same name, focus on “inference-time computing,” a technique that emphasizes multi-step reasoning and iterative refinement during inference to generate more accurate and contextually relevant responses. Compared with other leading AI models, this approach reduces the massive computational costs, improves efficiency during training, and shifts the computational bottleneck from training to inference.

To deploy such models efficiently, companies need tools that scale with their needs so that inference doesn’t become a bottleneck. Clarifai’s Inference Compute Orchestration provides the infrastructure to harness the full potential of this wave of AI.

“The DeepSeek models highlight the importance of optimized model inferencing as the new frontier in AI and underscore the need for tools that can optimize inference at scale,” said Matt Zeiler, Ph.D., Founder and CEO of Clarifai. “Clarifai’s Inference Compute Orchestration addresses this need, offering a unified platform to manage and deploy all AI models efficiently, including open source ones like DeepSeek—on every cloud, on-premise, or on edge devices with the same set of tools.”

In addition, because such powerful DeepSeek models are open source, meaning anyone can use and contribute to them, innovation will move faster than it would if the models were owned by a single company, as so many others are.

Clarifai’s Compute Orchestration will help organizations navigate the model inferencing era with:

- Optimized Inference: GPU fractioning packs multiple models onto the same GPU, and traffic-based autoscaling scales capacity up and down with demand, reducing costs without sacrificing performance.

- Control Center: A unified view for monitoring and managing AI compute resources, models, and deployments across multiple environments. With better insight and control over AI infrastructures, companies can prevent runaway costs.

- Enterprise-Grade Features: Provides the security and oversight enterprises require to deploy AI in regulated industries.
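
To make the first item in the list above more concrete, here is a minimal Python sketch of the two general ideas it names: packing several models onto shared GPUs and sizing a deployment from traffic. It is not Clarifai's implementation or API. The names `Gpu`, `pack_models`, and `desired_replicas` are hypothetical, the packing uses a simple first-fit-decreasing heuristic chosen for illustration, and each model's GPU share is assumed to be known in advance as a fraction between 0 and 1.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Gpu:
    """One physical GPU, tracked as a fraction of capacity still free."""
    free: float = 1.0
    models: list = field(default_factory=list)


def pack_models(demands: dict) -> list:
    """Place models on as few GPUs as a first-fit-decreasing pass finds.

    `demands` maps a model name to the fraction of one GPU it needs
    (an assumed, pre-measured value for this illustration).
    """
    gpus = []
    # Largest models first, so small ones can fill the leftover gaps.
    for name, need in sorted(demands.items(), key=lambda kv: kv[1], reverse=True):
        if not 0 < need <= 1:
            raise ValueError(f"{name}: demand must be in (0, 1], got {need}")
        for gpu in gpus:
            if gpu.free + 1e-9 >= need:  # small tolerance for float rounding
                break
        else:
            gpu = Gpu()  # no existing GPU has room, so add one
            gpus.append(gpu)
        gpu.free -= need
        gpu.models.append(name)
    return gpus


def desired_replicas(requests_per_sec: float, capacity_per_replica: float,
                     min_replicas: int = 0, max_replicas: int = 10) -> int:
    """Replica count that covers current traffic, clamped to fixed bounds."""
    needed = math.ceil(requests_per_sec / capacity_per_replica)
    return max(min_replicas, min(max_replicas, needed))
```

In this sketch, five models that together need 2.1 GPUs' worth of capacity would be placed on three shared GPUs rather than five dedicated ones, and a deployment with `min_replicas=0` would drop to zero replicas when traffic stops.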

To start experimenting with DeepSeek models, visit Clarifai's official website.
